At Mitigate, we use this conceptual model to build enterprise-ready generative AI systems.

We have been exploring ways to integrate large language models into our clients' and partners' organisations. However, non-technical stakeholders can find it challenging to grasp how all the components of generative AI fit together into a production-ready system.

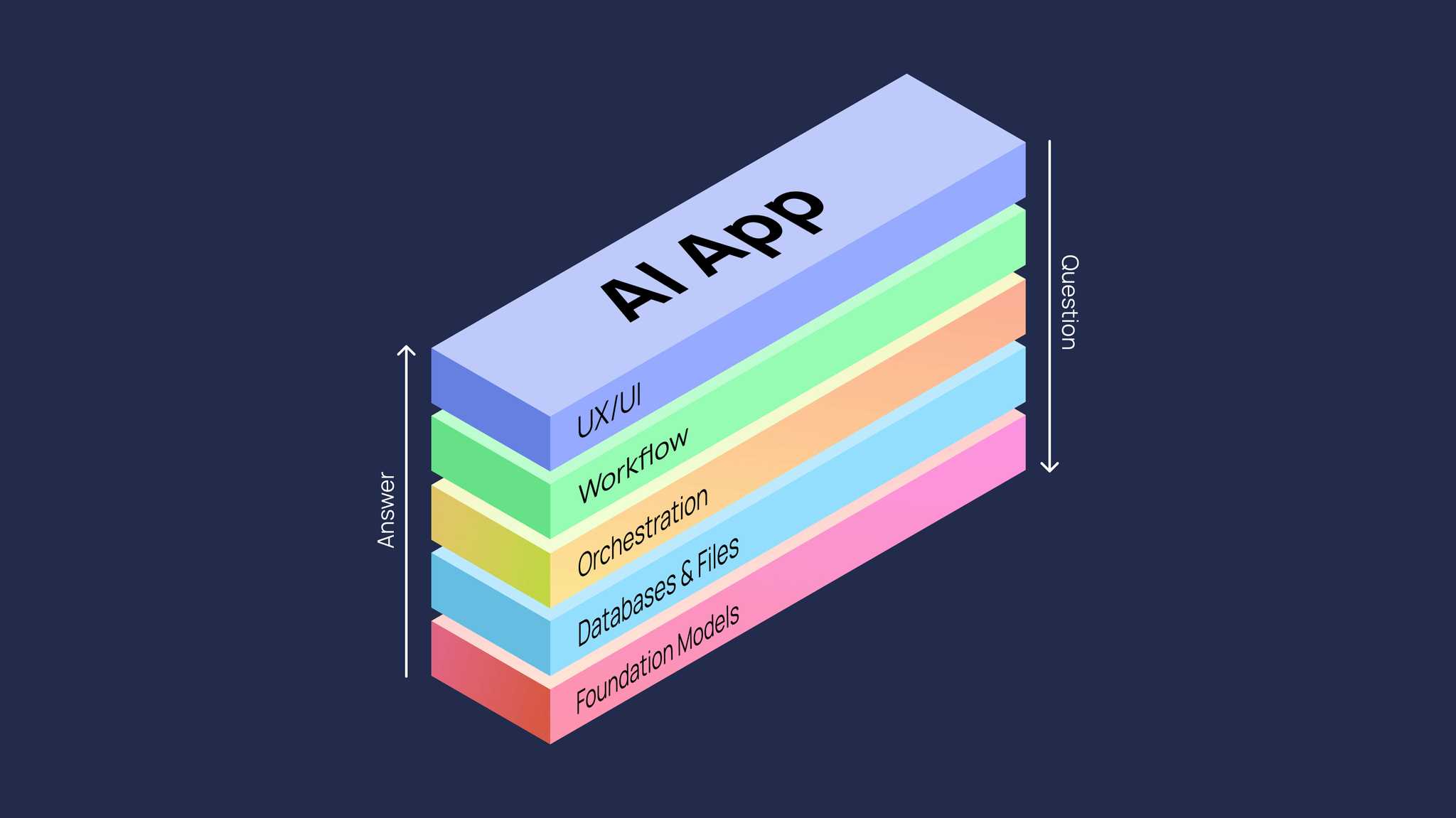

This article presents our Generative AI Tech Stack, a conceptual model that makes the components of a generative AI solution easier to understand. It starts with foundation language models, adds layers for data, service integration and workflows, and is capped by user-facing applications that deliver tailored experiences. The stack outlines the key elements of enterprise-grade generative AI and clarifies how it can be used as a business tool. It helps decision-makers, CTOs and managers understand AI's potential and limitations, and supports informed discussion of its possibilities and ethical use within their organisations.

The goal of this article is simple: to show how text-generating AI, which may seem like magic, can serve as a practical business tool.

#1 Foundational Models: LLMs Underpin the Generative AI Revolution

The Generative AI Tech Stack begins with Large Language Models (LLMs) such as GPT-4, which are trained on extensive datasets to produce human-like text. These foundation models underpin applications such as chatbots and content generation. However, LLMs can generate inaccurate information, which is why the stack needs further layers on top of them.

LLMs can be fine-tuned on specific datasets for tasks such as answering product queries or generating code. How far you can customise a model depends on its type: open-source models offer full control and privacy but require your own infrastructure, while closed-source models like GPT-4 are easy to access but offer limited customisation.

Organisations often take a hybrid approach, combining both types to balance innovation with control. This choice is crucial when planning scalable AI solutions.
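To make the hybrid approach more concrete, here is a minimal Python sketch of one possible routing rule. It assumes each request has already been tagged as containing personal data or not; `self_hosted_model` and `hosted_api_model` are hypothetical placeholders for a self-hosted open-source model and a closed-source API, and they simply echo the prompt so the example runs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Request:
    prompt: str
    # Assumed to be set by an upstream data-classification step.
    contains_personal_data: bool


def self_hosted_model(prompt: str) -> str:
    """Hypothetical placeholder for an open-source model on your own infrastructure."""
    return f"[self-hosted open-source model] {prompt}"


def hosted_api_model(prompt: str) -> str:
    """Hypothetical placeholder for a closed-source model accessed through an API."""
    return f"[closed-source API model] {prompt}"


def route(
    request: Request,
    private_model: Callable[[str], str] = self_hosted_model,
    public_model: Callable[[str], str] = hosted_api_model,
) -> str:
    """Keep sensitive prompts in-house; send everything else to the hosted API."""
    model = private_model if request.contains_personal_data else public_model
    return model(request.prompt)


print(route(Request("Summarise this customer's complaint", contains_personal_data=True)))
print(route(Request("Draft a tagline for our new product", contains_personal_data=False)))
```

Data sensitivity is only one illustrative criterion; a real routing policy might also weigh cost, latency or task difficulty.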

Some of the most popular foundation models: BERT, GPT, AI21 Jurassic, Claude.

#2 Database and Files Layer: Enable Contextual Responses

The Database/Knowledge Base layer is crucial in the Generative AI Tech Stack because it grounds the AI in real-world data. Databases hold structured data such as customer profiles and product information, enabling the AI to generate relevant, personalised responses. For instance, a chatbot can suggest products based on a customer's purchase history, or answer questions about your colleagues or projects: whatever you have in your databases and documents.
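As a rough illustration of the purchase-history example, the Python sketch below queries a toy in-memory SQLite database and turns the result into context that could be added to a prompt. The `purchases` table, its columns and the `purchase_context` helper are hypothetical; a real system would query its own customer database.

```python
import sqlite3


def purchase_context(conn: sqlite3.Connection, customer_id: int) -> str:
    """Turn a customer's purchase history into a sentence the model can use as context."""
    rows = conn.execute(
        "SELECT product FROM purchases WHERE customer_id = ? ORDER BY purchased_at",
        (customer_id,),
    ).fetchall()
    if not rows:
        return "The customer has no previous purchases."
    return "The customer has previously bought: " + ", ".join(r[0] for r in rows) + "."


# Toy in-memory database standing in for a real customer database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE purchases (customer_id INTEGER, product TEXT, purchased_at TEXT)")
conn.executemany(
    "INSERT INTO purchases VALUES (?, ?, ?)",
    [
        (1, "trail running shoes", "2024-01-10"),
        (1, "running socks", "2024-02-03"),
        (2, "yoga mat", "2024-01-22"),
    ],
)

# The database supplies the facts; the LLM is only asked to reason over them.
prompt = purchase_context(conn, 1) + "\nSuggest one complementary product."
print(prompt)
```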

Knowledge bases supplement foundation models with facts, rules and ontologies, reducing inaccuracies. They rely on efficient vector similarity search to link related concepts and retrieve relevant context.
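The snippet below illustrates what a vector similarity search does, using plain Python and hand-made toy vectors instead of a real embedding model or vector database. It ranks documents by cosine similarity to a query vector. The documents, vectors and function names are invented for illustration; a production system would delegate this to a vector database, typically with approximate nearest-neighbour indexes rather than this brute-force scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], documents: list[tuple[str, list[float]]], k: int = 1) -> list[str]:
    """Return the texts of the k documents most similar to the query (brute force)."""
    ranked = sorted(documents, key=lambda doc: cosine_similarity(query, doc[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


# Hand-made 3-dimensional "embeddings"; real embedding models produce far more dimensions.
documents = [
    ("Returns are accepted within 30 days.", [0.9, 0.1, 0.0]),
    ("Delivery takes 3-5 working days.", [0.2, 0.9, 0.1]),
    ("The office is closed on public holidays.", [0.0, 0.2, 0.9]),
]
query = [0.8, 0.3, 0.0]  # toy embedding of "Can I send my order back?"

print(top_k(query, documents, k=1))  # ['Returns are accepted within 30 days.']
```

The retrieved text is what gets passed to the LLM as context, so the answer rests on the knowledge base rather than on the model's memory alone.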

This layer helps keep AI responses fact-based and contextually accurate, which makes them more useful and reliable.

Some of the most popular vector databases: Pinecone, Chroma, Elastic, Milvus, Redis.

#3 Orchestration Layer: Combines Data and Services

The Orchestration Layer sits above the Database layer in the Generative AI Tech Stack and brings data and services into the AI's context. It queries databases and APIs in real time, enriching the context with tailored information so the AI can give relevant responses.

For example, an e-commerce tool might combine user browsing data, inventory and pricing from different sources to recommend appropriate products. This layer also converts unstructured data such as emails into vector embeddings the AI can search, and calls external APIs for additional context or processing, such as validation or business logic.
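Here is a minimal Python sketch of the e-commerce orchestration step: it merges browsing history, inventory and pricing, applies a business-logic check, and assembles a prompt for the model. The dictionaries stand in for real databases and APIs, and every name is hypothetical; frameworks such as LangChain or LlamaIndex provide their own abstractions for this, which the sketch does not attempt to reproduce.

```python
# Hypothetical data sources; in production these would be database queries or API calls.
BROWSING_HISTORY = {"u42": ["running shoes", "rain jacket"]}
INVENTORY = {"running shoes": 12, "rain jacket": 0, "water bottle": 40}
PRICES = {"running shoes": 89.00, "rain jacket": 120.00, "water bottle": 15.00}


def in_stock(product: str) -> bool:
    """Stand-in for an external business-logic API, here a simple stock check."""
    return INVENTORY.get(product, 0) > 0


def assemble_context(user_id: str) -> str:
    """Merge browsing, inventory and pricing data into one block of context."""
    lines = [
        f"- {product}: price {PRICES[product]:.2f}, in stock"
        for product in BROWSING_HISTORY.get(user_id, [])
        if in_stock(product)
    ]
    if not lines:
        return "No recently viewed products are currently available."
    return "Recently viewed products that are available:\n" + "\n".join(lines)


def build_prompt(user_id: str, question: str) -> str:
    """Combine the assembled context with the user's question for the LLM."""
    return (
        f"{assemble_context(user_id)}\n\n"
        f"Customer question: {question}\n"
        "Recommend only products listed above."
    )


print(build_prompt("u42", "What should I buy for my morning runs?"))
```

The out-of-stock rain jacket never reaches the model, which is the point of doing this filtering in the orchestration layer rather than hoping the LLM will respect stock levels.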

By managing data and service integration, the Orchestration Layer ensures personalised, context-aware responses from the AI system.

Some of the most popular frameworks: LangChain, LlamaIndex

#4 Workflow Layer: Creates Business Value

The Workflow Layer in the Generative AI Tech Stack is where AI pipelines for specific applications are designed by assembling reusable elements. It builds in best practices for security, accuracy and error handling to create reliable workflows.

For example, content moderation workflows might integrate steps like human validation and templated prompts to ensure adherence to guidelines. These workflows combine AI models and techniques into trustworthy end-to-end processes.

Prompt engineers play a key role in this layer, crafting and refining workflows that combine various AI models and methods. They build logic flows that become reusable modules, which the Application layer above can call through API endpoints.
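The content moderation example can be sketched as a small Python workflow built from reusable steps: a templated prompt, retries on transient errors, output validation and a human-validation step for anything the model flags. `fake_llm` and `strict_reviewer` are hypothetical stand-ins for a real model call and a real review queue, and the ALLOW/REVIEW labels are an assumed convention, not a standard.

```python
from typing import Callable

# Templated prompt: the guidelines and the post are filled in at run time.
PROMPT_TEMPLATE = (
    "You are a content moderator. Guidelines: {guidelines}\n"
    "Answer ALLOW or REVIEW.\n"
    "Post: {post}"
)
VALID_LABELS = {"ALLOW", "REVIEW"}


def fake_llm(prompt: str) -> str:
    """Hypothetical model call: flags any post that mentions 'spam'."""
    post = prompt.split("Post:", 1)[1]
    return "REVIEW" if "spam" in post.lower() else "ALLOW"


def classify(post: str, guidelines: str, llm: Callable[[str], str], retries: int = 2) -> str:
    """Reusable step: templated prompt, retry on transient errors, validate the output."""
    prompt = PROMPT_TEMPLATE.format(guidelines=guidelines, post=post)
    for _ in range(retries + 1):
        try:
            answer = llm(prompt).strip().upper()
        except (TimeoutError, ConnectionError):
            continue  # transient failure: try again
        if answer in VALID_LABELS:
            return answer
    return "REVIEW"  # no valid answer: escalate to a human rather than guess


def moderate(post: str, guidelines: str, llm: Callable[[str], str],
             human_review: Callable[[str], bool]) -> str:
    """Workflow: the model screens the post, a human validates anything flagged."""
    if classify(post, guidelines, llm) == "REVIEW":
        return "approved" if human_review(post) else "rejected"
    return "approved"


def strict_reviewer(post: str) -> bool:
    """Hypothetical human reviewer who rejects every escalated post."""
    return False


guidelines = "No spam or abusive language."
print(moderate("Great article, thanks!", guidelines, fake_llm, strict_reviewer))  # approved
print(moderate("Buy cheap SPAM now", guidelines, fake_llm, strict_reviewer))  # rejected
```

A function like `moderate` is the kind of module that could then be exposed to the Application layer through an API endpoint.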

This layer transforms individual AI models into cohesive systems that address real-world production needs, making the whole system more robust and effective than its individual parts.

#5 UX / UI Layer: Brings AI to Everyday Users

The Application or User Experience (UX) layer sits at the top of the Generative AI Tech Stack and focuses on integrating AI into intuitive user interfaces. Early generative AI applications were text-based, but this layer now extends to voice, vision and conversational interfaces such as chat.

Examples include applications with graphical interfaces, voice-activated prompts, and image recognition capabilities. Chatbots and conversational agents exemplify these natural UX designs, allowing users to interact as if they're speaking to a human.

The Application layer aims to make AI interactions seamless and intuitive, prioritising user-centred design to bridge the human-machine gap. By incorporating diverse interfaces, this layer unlocks the full potential of generative AI, making it accessible and beneficial for end users.

The model offers a foundational framework to understand generative AI architecture. However, it's an abstraction with limitations and should be used as a basis for discussion, not a rigid guideline. As practical experience grows, both the model and associated best practices will evolve.

Have a task for AI? Get in touch with us!